はじめに

前回は、点群データの合成を行いましたが、きれいに合成はできませんでした。

そこで今回は、合成できる条件を検証していきます。

前提条件

前提条件は、以下の通りです。

- pyrealsense2

- OpenCV ==4.6.0

- Python == 3.9.13

- Windows11

- open3D = 0.16.0

点群データの用意

キャプチャ用のプログラムを掲載しておきます。

“c” を押すと capture フォルダに点群データが保存されます。

import pyrealsense2 as rs

import numpy as np

import cv2

import open3d as o3d

import datetime

# Configure depth and color streams

pipeline = rs.pipeline()

config = rs.config()

config.enable_stream(rs.stream.depth, 640, 480, rs.format.z16, 30)

config.enable_stream(rs.stream.color, 640, 480, rs.format.bgr8, 30)

# Start streaming

pipeline.start(config)

align = rs.align(rs.stream.color)

vis = o3d.cuda.pybind.visualization.Visualizer()

vis.create_window('PCD', width=640, height=480)

pointcloud = o3d.cuda.pybind.geometry.PointCloud()

geom_added = False

number = 1

while True:

dt0 = datetime.datetime.now()

frames = pipeline.wait_for_frames()

frames = align.process(frames)

profile = frames.get_profile()

depth_frame = frames.get_depth_frame()

color_frame = frames.get_color_frame()

if not depth_frame or not color_frame:

continue

# Convert images to numpy arrays

depth_image = np.asanyarray(depth_frame.get_data())

color_image = np.asanyarray(color_frame.get_data())

o3d_color_image = cv2.cvtColor(color_image, cv2.COLOR_BGR2RGB)

img_depth = o3d.geometry.Image(depth_image)

img_color = o3d.geometry.Image(o3d_color_image)

rgbd = o3d.geometry.RGBDImage.create_from_color_and_depth(img_color, img_depth, convert_rgb_to_intensity=False)

intrinsics = profile.as_video_stream_profile().get_intrinsics()

pinhole_camera_intrinsic = o3d.camera.PinholeCameraIntrinsic(intrinsics.width, intrinsics.height, intrinsics.fx, intrinsics.fy, intrinsics.ppx, intrinsics.ppy)

pcd = o3d.cuda.pybind.geometry.PointCloud.create_from_rgbd_image(rgbd, pinhole_camera_intrinsic)

pcd.transform([[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, 1]])

voxel_down_pcd = pcd.voxel_down_sample(voxel_size=0.005)

alpha = 0.015

mesh = o3d.cuda.pybind.geometry.TriangleMesh.create_from_point_cloud_alpha_shape(voxel_down_pcd, alpha)

mesh.compute_vertex_normals()

pointcloud.points = pcd.points

pointcloud.colors = pcd.colors

if geom_added == False:

vis.add_geometry(pointcloud)

geom_added = True

vis.update_geometry(pointcloud)

vis.poll_events()

vis.update_renderer()

cv2.imshow('bgr', color_image)

key = cv2.waitKey(1)

if key == ord('q'):

break

elif key == ord("c"):

o3d.io.write_triangle_mesh("./capture/capture-"+str(number)+".ply", mesh)

number += 1

process_time = datetime.datetime.now() - dt0

print("FPS: " + str(1 / process_time.total_seconds()))

pipeline.stop()

cv2.destroyAllWindows()

vis.destroy_window()

del visこちらを使用して、点群データを 5つ 程作成してください。

データの確認

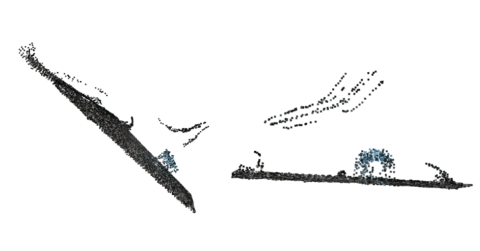

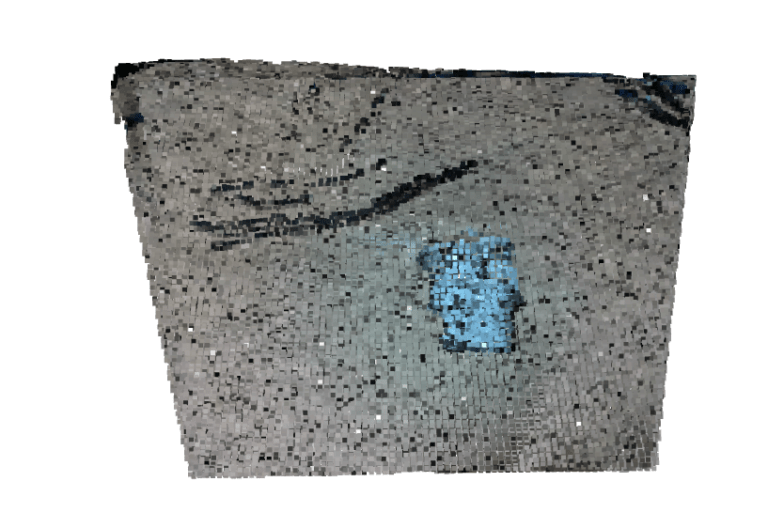

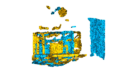

撮像した点群データを確認します。

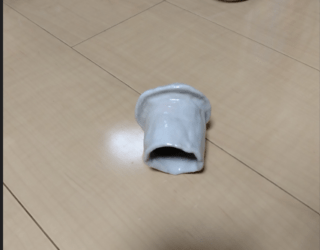

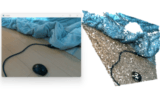

撮影対象はこちらです。

確認用プログラムを掲載しておきます。

import numpy as np

import open3d as o3d

import glob

pcd_ = glob.glob("./capture/*.ply")

pcd_list = []

for p in pcd_:

pcd = o3d.io.read_point_cloud(p)

pcd_list.append(pcd)

print(pcd)

np_pcd = np.asarray(pcd.points)

print(np_pcd, np_pcd.shape)

o3d.visualization.draw_geometries(pcd_list)

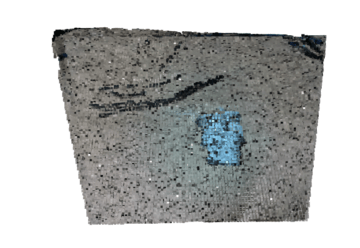

このように、5つの点群データは少しずつずれています。

点群データの合成

合成プログラムを掲載しておきます。

# ----------------------------------------------------------------------------

# - Open3D: www.open3d.org -

# ----------------------------------------------------------------------------

# The MIT License (MIT)

#

# Copyright (c) 2018-2021 www.open3d.org

#

# Permission is hereby granted, free of charge, to any person obtaining a copy

# of this software and associated documentation files (the "Software"), to deal

# in the Software without restriction, including without limitation the rights

# to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

# copies of the Software, and to permit persons to whom the Software is

# furnished to do so, subject to the following conditions:

#

# The above copyright notice and this permission notice shall be included in

# all copies or substantial portions of the Software.

#

# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

# FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS

# IN THE SOFTWARE.

# ----------------------------------------------------------------------------

"""Align multiple pieces of geometry in a global space"""

import open3d as o3d

import numpy as np

import glob

def load_point_clouds(voxel_size=0.0):

pcd_data = glob.glob("./capture/*.ply")

#pcd_data = ["./dataset/output-1.ply","./dataset/output-2.ply","./dataset/output-3.ply"]

pcds = []

for i in range(len(pcd_data)):

print(i)

pcd = o3d.io.read_point_cloud(pcd_data[i])

pcd_down = pcd.voxel_down_sample(voxel_size=voxel_size)

pcds.append(pcd_down)

return pcds

def pairwise_registration(source, target, max_correspondence_distance_coarse,

max_correspondence_distance_fine):

print("Apply point-to-plane ICP")

icp_coarse = o3d.pipelines.registration.registration_icp(

source, target, max_correspondence_distance_coarse, np.identity(4),

o3d.pipelines.registration.TransformationEstimationPointToPlane())

icp_fine = o3d.pipelines.registration.registration_icp(

source, target, max_correspondence_distance_fine,

icp_coarse.transformation,

o3d.pipelines.registration.TransformationEstimationPointToPlane())

transformation_icp = icp_fine.transformation

information_icp = o3d.pipelines.registration.get_information_matrix_from_point_clouds(

source, target, max_correspondence_distance_fine,

icp_fine.transformation)

return transformation_icp, information_icp

def full_registration(pcds, max_correspondence_distance_coarse,

max_correspondence_distance_fine):

pose_graph = o3d.pipelines.registration.PoseGraph()

odometry = np.identity(4)

pose_graph.nodes.append(o3d.pipelines.registration.PoseGraphNode(odometry))

n_pcds = len(pcds)

for source_id in range(n_pcds):

for target_id in range(source_id + 1, n_pcds):

transformation_icp, information_icp = pairwise_registration(

pcds[source_id], pcds[target_id],

max_correspondence_distance_coarse,

max_correspondence_distance_fine)

print("Build o3d.pipelines.registration.PoseGraph")

if target_id == source_id + 1: # odometry case

odometry = np.dot(transformation_icp, odometry)

pose_graph.nodes.append(

o3d.pipelines.registration.PoseGraphNode(

np.linalg.inv(odometry)))

pose_graph.edges.append(

o3d.pipelines.registration.PoseGraphEdge(source_id,

target_id,

transformation_icp,

information_icp,

uncertain=False))

else: # loop closure case

pose_graph.edges.append(

o3d.pipelines.registration.PoseGraphEdge(source_id,

target_id,

transformation_icp,

information_icp,

uncertain=True))

return pose_graph

if __name__ == "__main__":

voxel_size = 0.005

pcds_down = load_point_clouds(voxel_size)

o3d.visualization.draw(pcds_down)

print("Full registration ...")

max_correspondence_distance_coarse = voxel_size * 15

max_correspondence_distance_fine = voxel_size * 1.5

with o3d.utility.VerbosityContextManager(

o3d.utility.VerbosityLevel.Debug) as cm:

pose_graph = full_registration(pcds_down,

max_correspondence_distance_coarse,

max_correspondence_distance_fine)

print("Optimizing PoseGraph ...")

option = o3d.pipelines.registration.GlobalOptimizationOption(

max_correspondence_distance=max_correspondence_distance_fine,

edge_prune_threshold=0.25,

reference_node=0)

with o3d.utility.VerbosityContextManager(

o3d.utility.VerbosityLevel.Debug) as cm:

o3d.pipelines.registration.global_optimization(

pose_graph,

o3d.pipelines.registration.GlobalOptimizationLevenbergMarquardt(),

o3d.pipelines.registration.GlobalOptimizationConvergenceCriteria(),

option)

print("Transform points and display")

for point_id in range(len(pcds_down)):

print(pose_graph.nodes[point_id].pose)

pcds_down[point_id].transform(pose_graph.nodes[point_id].pose)

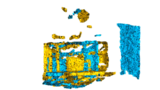

o3d.visualization.draw(pcds_down)上記を実行すると、以下の出力が得られます。

最初に表示される window は元データです。

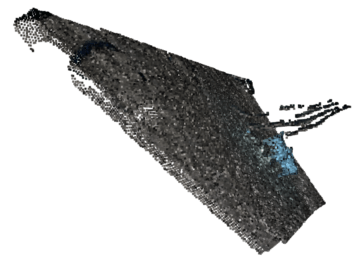

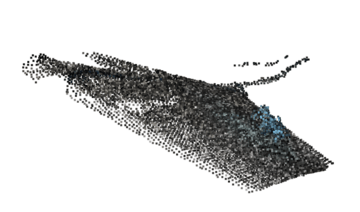

1s 以下の計算後、以下の点群データが表示されます。

非常に精度良く合成できました!

おわりに

今回は、点群データの合成を前回よりも精度向上させて実行しました。

前回と比較すると、かなり精度良くなりました。

これを利用して次回は、YOLOv5と連携させていきます。

コメント